Modifying a virtual environment in just a few clicks

Creating and modifying a virtual reality environment just got a lot easier with the software developed by the spin-off Imverse. © 2018 Imverse

Creating and modifying a virtual reality environment just got a lot easier thanks to software being released today by Imverse, an EPFL spin-off. The secret behind Imverse’s program, which works much like a photo editor, is a three-dimensional rendering engine based on 3D pixels called voxels. The rendering engine can be used for other virtual reality applications as well, such as depicting real people. The startup’s initial target market is the movie and video game industry.

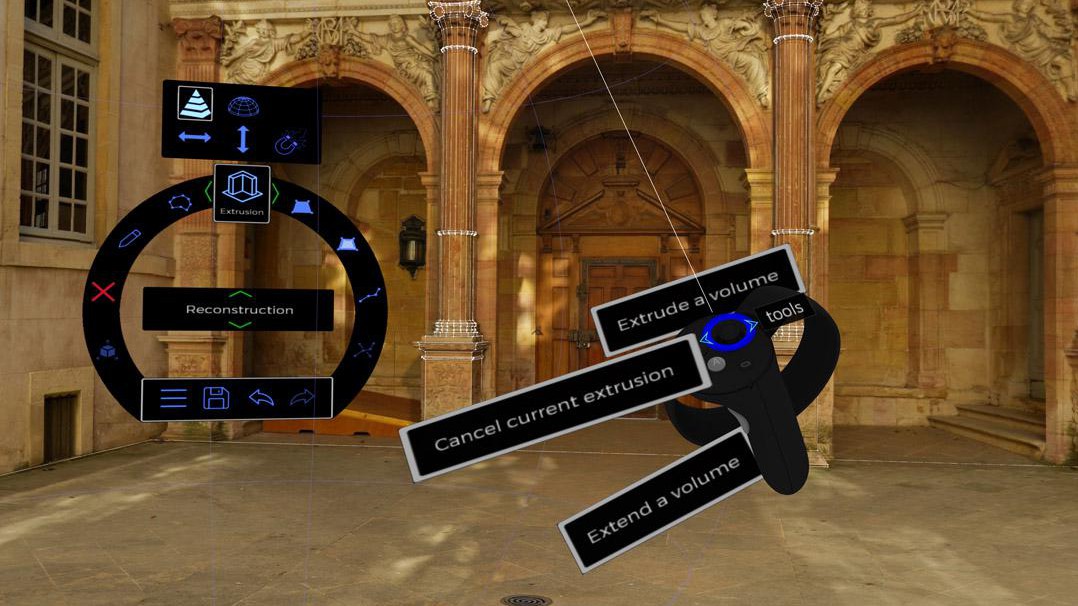

Wearing a virtual reality headset and holding handheld controllers, you can use Imverse’s new software to select tools that let you add depth, cut, paste, paint and zoom in and out – much like in a photo editor. Your virtual environment responds to your creative flourishes, even when it starts out as a 2D or 360-degree photo: in an instant, your surroundings can change as you push back walls, create space here and there, add furniture and experiment with colors. The beta version of the software will be available online starting today.

Creating a 3D scenario ten times faster

Imverse’s time-saving technology could come in handy in a wide range of fields, such as real estate, architecture, graphic design, interior decoration and photography – engineers could even use it for certain kinds of modeling. But the EPFL spin-off is initially setting its sights on the movie, media and video game industries, where its software could be used for such tasks as sketching out scenarios in 3D, previewing movie sets and how they could change during a film, and mapping out the locations of cameras and actors. “Producers can use it to take a photo of a real set, convert the 360-degree image into a 3D environment and then, using virtual reality, edit it in order to visualize the scene before they film it. And they’ll be able to do these things ten times faster than with conventional programs,” says Javier Bello, the startup’s co-founder and CEO.

Setting its sights on the movie industry from the start may seem ambitious, but the company has done its homework. Imverse’s founders have already begun feeling out the industry and making contacts, first at Sundance – the largest independent film festival in the US – early this year, and later at the Cannes Film Festival. “Our first target market is the entertainment industry, because people who work there are already familiar with the types of software used for visual effects and motion capture. They can quickly grasp how our program can simplify their work and save them time,” adds Bello. What’s more, going after the movie and game industries will put the company in contact with heavyweights like Intel, Microsoft and Oculus.

A voxel-based, high-potential technology

Imverse is also aiming high for another reason: the technology on which its software is based can be used for many other applications. The company’s powerful voxel-based rendering engine does away with the notorious polygon meshes so familiar to video-game and animated-film makers. Voxels are not new, but until recently they required too much computing power to be feasible for real-time rendering.

“This technology was developed over the past 12 years at EPFL’s Laboratory of Cognitive Neuroscience, for use in neurology research. It lets us create smarter tools, processes and approaches for connecting hardware and software,” says Bello, a virtual reality expert. Bello, together with colleague and co-founder Robin Mange, sees broader implications – and thus a particularly bright future – for their technology. “And not just in virtual reality – we started with that field to show the high level of precision it can attain,” he adds.

Shaking a friend’s hand in the real and virtual worlds at the same time

The startup is also hard at work on bringing real-time representations of users’ bodies – and of other people around them – into the virtual reality environment. Its mixed-reality technology has already been used by an artist to make a short film called Elastic Time. The company showcased its system at Sundance, giving industry professionals a chance to try it out. “The ability for people to interact in real time in both the real and virtual worlds will lead to ever more possibilities,” concludes Bello.