Enabling next generation brain simulations with performance modelling

© 2020 EPFL

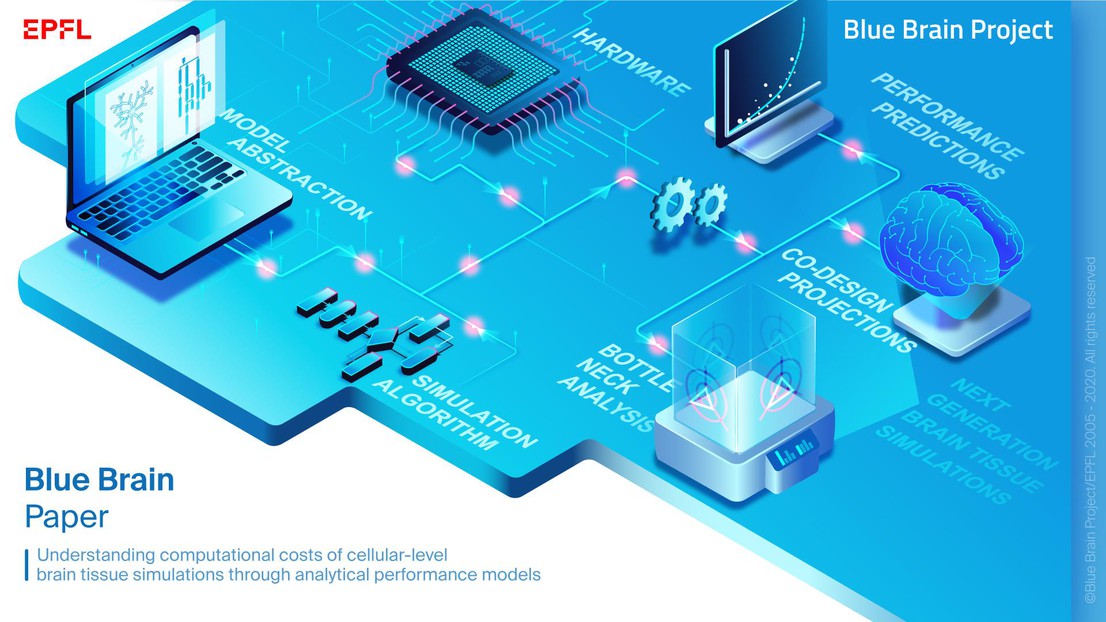

For the first time, scientists at the EPFL Blue Brain Project, a Swiss brain research initiative, have extended performance modelling techniques to the field of computational brain science, with findings that are useful today and indispensable for the future. In a paper published in Neuroinformatics, they provide a quantitative appraisal of the performance landscape of brain tissue simulations and analyze in detail the relationship between an in silico experiment, the underlying neuron and connectivity model, the simulation algorithm, and the hardware platform being used. In doing so, they derive the first analytical performance models of detailed brain tissue simulations, a concrete step toward enabling the next generation of brain tissue simulations.

Understanding the brain is one of the biggest ‘big data’ challenges facing us today, and computational modelling and simulation have increasingly become essential tools in the quest to better understand the brain’s structure and to decipher the causal interrelations of its components. Until now, general-purpose computing has largely been able to deliver the performance needed to simulate brain tissue, but physical limitations make it increasingly difficult to rely on future generations of computers for further gains. If whole-brain models are to be achieved on future computer generations, understanding the computational characteristics of models and simulation engines will be indispensable.

To date, the sheer breadth of biochemical and biophysical processes and structures in the brain has led to the development of a large variety of model abstractions and specialized tools for simulating brain tissue, several of which run on the largest computers in the world. However, their advancement has mostly relied on performance benchmarking, i.e. the practice of measuring and optimizing a software and algorithmic approach on a given hardware platform, and only in some cases on initial model-based extrapolation.

Insights using analytical performance modelling

To go beyond the benchmarking approach and previously published initial extrapolations, Blue Brain scientists have used a complementary approach: detailed performance modelling, the science of modelling the performance of a brain tissue model, its algorithm and its simulation scheme in combination with the specific computer architecture on which it runs. Using a hybrid grey-box model that combines biological and algorithmic properties with hardware specifications, the scientists identified performance bottlenecks under different simulation regimes, corresponding to a variety of prototypical scientific questions that can be answered by simulations of biological neural networks. The analysis was based on a state-of-the-art high performance computing (HPC) hardware architecture and applied to previously published neural network simulations (the Brunel model, and the original and a simplified version of the Blue Brain Project’s Reconstructed microcircuit), which were selected to represent important neuron models in the literature.
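To make the idea of a bottleneck analysis more concrete, here is a minimal roofline-style sketch in Python. It is not the authors’ grey-box model: the `Hardware` and `Workload` classes, every number in them, and the assumption that communication does not overlap with computation are hypothetical simplifications chosen for illustration. The sketch estimates node-local time as the slower of arithmetic and memory traffic, adds spike-exchange communication on top, and reports which single cost component is largest.

```python
from dataclasses import dataclass


@dataclass
class Hardware:
    """Hypothetical node specification (illustrative numbers only)."""
    peak_flops: float      # floating-point operations per second
    mem_bandwidth: float   # main-memory bytes per second
    net_latency: float     # seconds per message
    net_bandwidth: float   # interconnect bytes per second


@dataclass
class Workload:
    """Hypothetical per-timestep cost of simulating one network on one node."""
    flops: float           # arithmetic work per timestep
    mem_bytes: float       # main-memory traffic per timestep
    messages: int          # spike-exchange messages per timestep
    msg_bytes: float       # bytes exchanged per timestep


def predict_timestep(w: Workload, hw: Hardware) -> tuple[float, str]:
    """Return (predicted seconds per timestep, largest single cost component)."""
    t_compute = w.flops / hw.peak_flops
    t_memory = w.mem_bytes / hw.mem_bandwidth
    # Per-message latency plus transfer time; assumed not to overlap with computation.
    t_network = w.messages * hw.net_latency + w.msg_bytes / hw.net_bandwidth
    parts = {"compute": t_compute, "memory bandwidth": t_memory, "network": t_network}
    bottleneck = max(parts, key=parts.get)
    # Roofline assumption: compute and memory traffic overlap, so the slower one dominates.
    return max(t_compute, t_memory) + t_network, bottleneck


hw = Hardware(peak_flops=1e12, mem_bandwidth=1e11, net_latency=1e-6, net_bandwidth=1e10)
# Made-up workloads: a detailed, multi-compartment network and a point-neuron network.
detailed = Workload(flops=2e9, mem_bytes=4e8, messages=10, msg_bytes=1e5)
point = Workload(flops=1e7, mem_bytes=1e7, messages=1000, msg_bytes=1e6)

for name, w in [("detailed", detailed), ("point-neuron", point)]:
    t, limit = predict_timestep(w, hw)
    print(f"{name}: {t * 1e3:.2f} ms per timestep, limited by {limit}")
```

With these invented inputs, the detailed workload comes out limited by memory bandwidth and the point-neuron workload by the network. Real conclusions depend on measured model and hardware parameters, which is precisely what a detailed performance model has to supply.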

Francesco Cremonesi, the first author of the study, explains, “For the first time, we have applied advanced analytical and quantitative performance modelling techniques to the field of cellular-level brain tissue simulations. This has given us deep insight into which types of models require which types of hardware and what we should do to improve their efficiency (and thus simulate larger models faster on the same hardware). We believe this is an important contribution to the community that sometimes compares brain tissue models solely on the number of neurons and synapses, but ignores the large differences in computational costs depending on the level of detail of the models,” he concludes.

An important breakthrough for navigating the uncertain future in computing

With Moore’s law¹ slowing down and possibly even ending in the not too distant future, it is becoming critically important to understand which hardware properties limit the rate of a computation, now and on future systems, and which problems may remain unsolvable unless new methods, hardware, etc. are found. Detailed insight into which part of a computer system (e.g. memory bandwidth, network latency, processing speed) is most relevant for solving a scientific problem is therefore indispensable for the future, as it provides a quantitative and systematic framework within which these future developments can be prioritized.
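As a rough illustration of how such a framework can rank hardware improvements, the sketch below applies an Amdahl’s-law-style estimate. It assumes, hypothetically, that the time of one simulation timestep splits into independent, non-overlapping components, and computes the overall speedup if only one component’s hardware became twice as fast. The component names and times are made-up inputs, not results from the study.

```python
def speedup(times: dict[str, float], resource: str, factor: float) -> float:
    """Overall speedup if only `resource` becomes `factor` times faster.

    Assumes the component times simply add up (no overlap), as in Amdahl's law.
    """
    total = sum(times.values())
    improved = total - times[resource] + times[resource] / factor
    return total / improved


def rank_upgrades(times: dict[str, float], factor: float = 2.0) -> list[tuple[str, float]]:
    """Return (resource, speedup) pairs, most beneficial upgrade first."""
    gains = [(name, speedup(times, name, factor)) for name in times]
    return sorted(gains, key=lambda pair: pair[1], reverse=True)


# Hypothetical time breakdown of one simulation timestep, in seconds.
step_times = {
    "processing speed": 1.0e-3,
    "memory bandwidth": 4.0e-3,
    "network latency": 0.5e-3,
}

for name, gain in rank_upgrades(step_times):
    print(f"2x {name}: {gain:.2f}x overall")
```

With these invented numbers, doubling memory bandwidth yields about a 1.57x overall speedup, against about 1.10x for doubling processing speed and about 1.05x for halving network latency, so the dominant component is the one worth prioritizing.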

Prof. Felix Schürmann, senior author of the study, explains, “This work provides a long necessary formal framework that enables a quantitative discussion between in silico modelers, high performance software researchers and hardware architects”. He continues, “The methodology developed in this work constitutes a quantitative means through which these scientific communities can collaborate in the task of designing and optimizing future software and hardware for the next generation of brain tissue simulations”.


Cremonesi, F., Schürmann, F. Understanding computational costs of cellular-level brain tissue simulations through analytical performance models. Neuroinformatics, January 2020.

For more information, please contact Blue Brain Communications Manager – [email protected]

¹ Moore’s law began as an observation, and later became a self-fulfilling prophecy, of the exponential growth in the number of transistors crammed onto silicon chips. It has been a key enabler of unparalleled growth in computing power over many decades and has fueled countless scientific and economic developments.

This study was supported by funding to the Blue Brain Project, a research center of the École polytechnique fédérale de Lausanne (EPFL), from the Swiss government’s ETH Board of the Swiss Federal Institutes of Technology.

Acknowledgements

The authors gratefully acknowledge the compute resources and support provided by the Erlangen Regional Computing Center (RRZE), and in particular Georg Hager for the fruitful discussions regarding the ECM model and the interpretation of performance predictions.