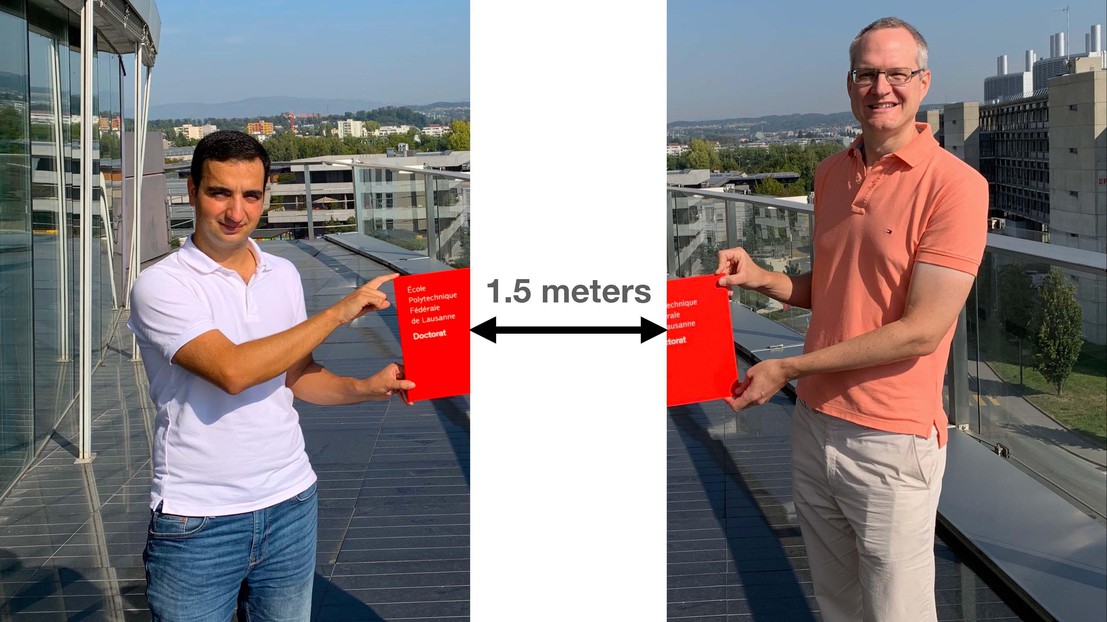

Congrats to Dr. Soroosh Shafieezadeh-Abadeh for obtaining his PhD

Dr. Soroosh Shafieezadeh-Abadeh & Prof. Daniel Kuhn

Dr. Soroosh Shafieezadeh-Abadeh obtained his PhD in June 2020. His dissertation, supervised by Prof. Daniel Kuhn, is entitled "Wasserstein Distributionally Robust Learning".

Abstract

Many decision problems in science, engineering, and economics are affected by uncertainty, which is typically modeled by a random variable governed by an unknown probability distribution. For many practical applications, the probability distribution is only observable through a set of training samples. In data-driven decision-making, the goal is to find a decision from the training samples that will perform equally well on unseen test samples. In this thesis, we leverage techniques from distributionally robust optimization to address decision-making problems in statistical learning, behavioral economics and estimation problems. In particular, Wasserstein distributionally robust optimization is studied where the decision-maker learns decisions that perform well under the most adverse distribution within a certain Wasserstein distance from a nominal distribution constructed from the training samples. We show that the robust decisions can be computed very efficiently by solving tractable convex optimization problems with rigorous out-of-sample guarantees.

In the first part of the thesis we study regression and classification methods in supervised learning from the distributionally robust perspective. In the classical setting the goal is to minimize the empirical risk, that is, the expectation of some loss function quantifying the prediction error under the empirical distribution. When facing scarce training data, overfitting is typically mitigated by adding regularization terms to the objective that penalize hypothesis complexity. We introduce new regularization techniques using ideas from distributionally robust optimization, and we give new probabilistic interpretations to existing techniques. Specifically, we propose to minimize the worst-case expected loss, where the worst case is taken over the ball of all (continuous or discrete) distributions that have a bounded transportation distance from the (discrete) empirical distribution. By choosing the radius of this ball judiciously, we can guarantee that the worst-case expected loss provides an upper confidence bound on the loss on test data, thus offering new generalization bounds.

We prove that the resulting regularized learning problems are tractable and can be tractably kernelized for many popular loss functions. The proposed approach to regularization is also extended to neural networks.
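In symbols, the worst-case learning problem described in this part can be sketched as follows. The notation is assumed here for illustration and is not taken from the abstract: $\theta$ denotes the hypothesis parameters, $\ell$ the loss, $\widehat{P}_N$ the empirical distribution on the $N$ training samples $\widehat{\xi}_1, \dots, \widehat{\xi}_N$, $\rho$ the radius of the ball, and $d$ the transportation cost.

```latex
\min_{\theta \in \Theta} \; \sup_{Q \in \mathbb{B}_\rho(\widehat{P}_N)}
  \mathbb{E}_{\xi \sim Q}\big[ \ell(\theta; \xi) \big],
\qquad
\widehat{P}_N = \frac{1}{N} \sum_{i=1}^{N} \delta_{\widehat{\xi}_i},

\mathbb{B}_\rho(\widehat{P}_N) = \big\{ Q : W(Q, \widehat{P}_N) \le \rho \big\},
\qquad
W(Q, P) = \inf_{\pi \in \Pi(Q, P)}
  \mathbb{E}_{(\xi, \xi') \sim \pi}\big[ d(\xi, \xi') \big]
```

Here $\Pi(Q, P)$ is the set of joint distributions with marginals $Q$ and $P$. Setting $\rho = 0$ recovers classical empirical risk minimization, while a larger $\rho$ hedges against more distant distributions.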

In the second part of the thesis we consider data-driven inverse optimization problems where an observer aims to learn the preferences of an agent who solves a parametric optimization problem depending on an exogenous signal. Thus, the observer seeks the agent’s objective function that best explains a historical sequence of signals and corresponding optimal actions. We focus here on situations where the observer has imperfect information, that is, where the agent’s true objective function is not contained in the search space of candidate objectives, where the agent suffers from bounded rationality or implementation errors, or where the observed signal-response pairs are corrupted by measurement noise. We formalize this inverse optimization problem as a distributionally robust program minimizing the worst-case risk that the predicted decision (i.e., the decision implied by a particular candidate objective) differs from the agent’s actual response to a random signal. We show that our framework offers rigorous out-of-sample guarantees for different loss functions used to measure prediction errors and that the emerging inverse optimization problems can be exactly reformulated as (or safely approximated by) tractable convex programs when a new suboptimality loss function is used.
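The abstract does not state the suboptimality loss explicitly. One natural way to formalize it, given here only as a sketch under assumed notation, measures how far an observed response $x$ is from being optimal for a candidate objective $F_\theta$ when the signal is $s$ and the agent's feasible set is $\mathbb{X}(s)$:

```latex
\ell_\theta(s, x) = F_\theta(s, x) - \min_{y \in \mathbb{X}(s)} F_\theta(s, y),
\qquad
\min_{\theta \in \Theta} \; \sup_{Q \in \mathbb{B}_\rho(\widehat{P}_N)}
  \mathbb{E}_{(s, x) \sim Q}\big[ \ell_\theta(s, x) \big]
```

Under this reading the loss is nonnegative for every feasible response and vanishes exactly when $x$ minimizes the candidate objective, so a small worst-case risk means the candidate objective explains the observed signal-response pairs well.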

In the final part of the thesis we study a distributionally robust mean square error estimation problem over a nonconvex Wasserstein ambiguity set containing only normal distributions. We show that the optimal estimator and the least favorable distribution form a Nash equilibrium. Despite the nonconvex nature of the ambiguity set, we prove that the estimation problem is equivalent to a tractable convex program.

We further devise a Frank-Wolfe algorithm for this convex program whose direction searching subproblem can be solved in a quasi-closed form. Using these ingredients, we introduce a distributionally robust Kalman filter that hedges against model risk.
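For normal distributions, the type-2 Wasserstein distance with Euclidean ground metric has a well-known closed form that depends only on the means and covariance matrices. The sketch below evaluates that formula with NumPy to illustrate what membership in such an ambiguity set could look like. The choice of the type-2 distance is an assumption (the abstract does not specify the order), and the function names `psd_sqrt` and `gaussian_w2` are hypothetical helpers, not part of the thesis.

```python
import numpy as np


def psd_sqrt(matrix):
    """Square root of a symmetric positive semidefinite matrix (hypothetical helper)."""
    # Symmetrize first to suppress round-off asymmetry, then use eigendecomposition.
    eigvals, eigvecs = np.linalg.eigh((matrix + matrix.T) / 2.0)
    eigvals = np.clip(eigvals, 0.0, None)  # remove tiny negative eigenvalues
    return (eigvecs * np.sqrt(eigvals)) @ eigvecs.T


def gaussian_w2(mean1, cov1, mean2, cov2):
    """Type-2 Wasserstein distance between N(mean1, cov1) and N(mean2, cov2).

    Assumes the Euclidean ground metric; the squared distance is
    ||mean1 - mean2||^2 + tr(cov1 + cov2 - 2 (cov2^{1/2} cov1 cov2^{1/2})^{1/2}).
    """
    mean1 = np.asarray(mean1, dtype=float)
    mean2 = np.asarray(mean2, dtype=float)
    cov1 = np.asarray(cov1, dtype=float)
    cov2 = np.asarray(cov2, dtype=float)

    root2 = psd_sqrt(cov2)
    cross = psd_sqrt(root2 @ cov1 @ root2)
    squared = np.sum((mean1 - mean2) ** 2) + np.trace(cov1 + cov2 - 2.0 * cross)
    return float(np.sqrt(max(squared, 0.0)))  # guard against round-off below zero


# Illustrative check: is a candidate normal distribution within radius rho
# of a nominal one? All numbers below are made up for the example.
nominal_mean, nominal_cov = np.zeros(2), np.eye(2)
candidate_mean, candidate_cov = np.array([0.3, -0.1]), 1.5 * np.eye(2)
rho = 0.5
inside_ball = gaussian_w2(candidate_mean, candidate_cov, nominal_mean, nominal_cov) <= rho
```

When the two covariance matrices coincide, the trace term vanishes and the distance reduces to the Euclidean distance between the means.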

Wasserstein Distributionally Robust Learning, Soroosh Shafieezadeh-Abadeh, Daniel Kuhn (Dir.), 2020, EPFL, Lausanne.