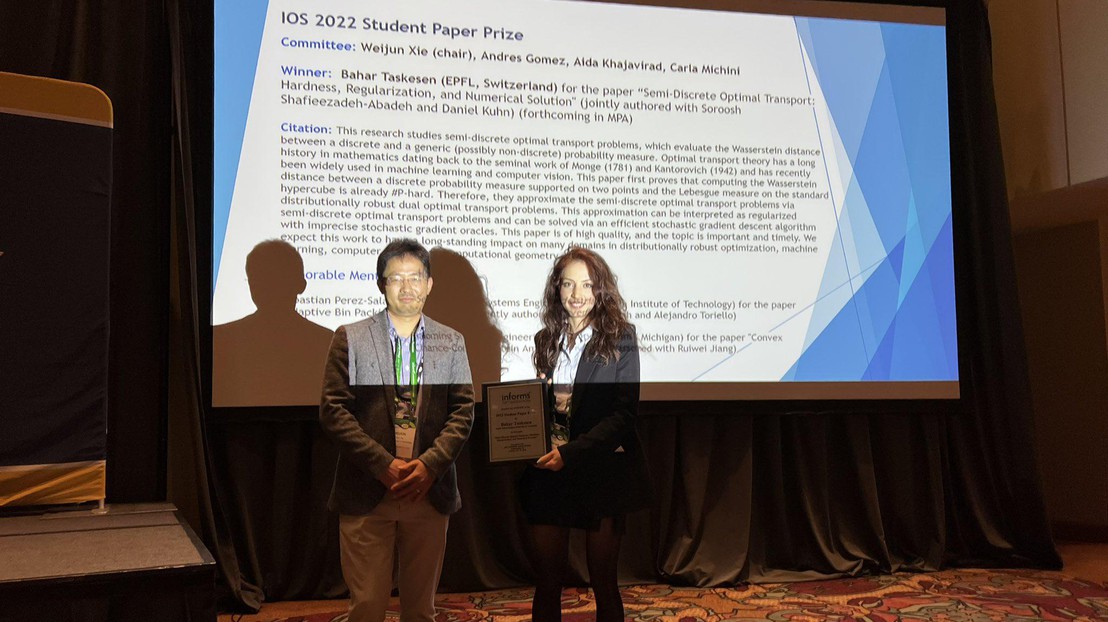

Bahar Taskesen wins INFORMS Optimization Society Student Paper Prize

© 2022 EPFL

At the INFORMS Annual Meeting 2022 Bahar Taskesen, PhD student of Daniel Kuhn, received the INFORMS Optimization Society Best Student Paper Award for her paper "Semi-Discrete Optimal Transport: Hardness, Regularization, and Numerical Solution" (co-authored with Soroosh Shafieezadeh-Abadeh and Daniel Kuhn, forthcoming in Mathematical Programming).

With over 12,500 members from around the globe, INFORMS is the leading international association for professionals in operations research, analytics, management science, economics, behavioral science, statistics, artificial intelligence, data science, applied mathematics, and other relevant fields. The INFORMS Optimization Society Student Paper Prize, established in 2006 and administered by the INFORMS Optimization Society, is awarded annually to one (or more) students for the most outstanding paper in optimization that is submitted to and received, or published in a refereed professional journal within the three calendar years preceding the year of the award. The prize serves as an esteemed recognition of promising students who are looking for an academic or industrial career.

Abstract:

Semi-discrete optimal transport problems, which evaluate the Wasserstein distance between a discrete and a generic (possibly non-discrete) probability measure, are believed to be computationally hard. Even though such problems are ubiquitous in statistics, machine learning and computer vision, however, this perception has not yet received a theoretical justification. To fill this gap, we prove that computing the Wasserstein distance between a discrete probability measure supported on two points and the Lebesgue measure on the standard hypercube is already #P-hard. This insight prompts us to seek approximate solutions for semi-discrete optimal transport problems. We thus perturb the underlying transportation cost with an additive disturbance governed by an ambiguous probability distribution, and we introduce a distributionally robust dual optimal transport problem whose objective function is smoothed with the most adverse disturbance distributions from within a given ambiguity set. We further show that smoothing the dual objective function is equivalent to regularizing the primal objective function, and we identify several ambiguity sets that give rise to several known and new regularization schemes. As a byproduct, we discover an intimate relation between semi-discrete optimal transport problems and discrete choice models traditionally studied in psychology and economics. To solve the regularized optimal transport problems efficiently, we use a stochastic gradient descent algorithm with imprecise stochastic gradient oracles. A new convergence analysis reveals that this algorithm improves the best known convergence guarantee for semi-discrete optimal transport problems with entropic regularizers.